Under HIPAA, a covered entity cannot disclose protected health information unless the patient (or the patient’s guardian or representative) gives authorization, or unless an exception to that requirement applies.

So, what IS protected health information (PHI)?

Protected health information is information, including demographic information, which relates to:

- the individual’s past, present, or future physical or mental health or condition,

- the provision of health care to the individual, or

- the past, present, or future payment for the provision of health care to the individual,

and that identifies the individual or for which there is a reasonable basis to believe it can be used to identify the individual. Protected health information includes many common identifiers (e.g., name, address, birth date, Social Security Number) when they can be associated with the health information listed above.

For example, a medical record, laboratory report, or hospital bill would be PHI because each document would contain a patient’s name and/or other identifying information associated with the health data content.

An example of an exception would be resolving a credit card dispute. Say a patient uses their credit card to pay for a procedure. Then that patient files a chargeback, alleging the procedure was not performed or was performed incorrectly. HIPAA’s exception for treatment, payment, and health care operations (TPO) generally allows covered entities to disclose protected health information (PHI) for payment-related activities, including resolving credit card disputes. This means that, in many cases, you can disclose patient information to your credit card processor or bank to dispute a chargeback without requiring individual patient authorization. Just use the minimum amount of PHI needed to resolve the dispute. No need to add that this patient had a history of a sexually transmitted disease and a concurrent diagnosis of schizophrenia.
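One way to picture the "minimum needed" idea is an allow-list: start from nothing and copy over only the fields the dispute requires. The Python sketch below is illustrative only. The field names and the choice of which fields a chargeback response needs are assumptions made for the example, not anything HIPAA or a card processor specifies.

```python
# Hypothetical allow-list of fields for a chargeback response. Which fields
# a dispute really needs is an assumption here; confirm with your processor
# and your counsel.
DISPUTE_FIELDS = {
    "patient_name",
    "date_of_service",
    "amount_charged",
    "service_description",
}


def minimum_necessary(record, allowed=DISPUTE_FIELDS):
    """Return a copy of the record containing only allow-listed fields."""
    return {key: value for key, value in record.items() if key in allowed}


# Made-up record for illustration.
record = {
    "patient_name": "Jane Doe",
    "date_of_service": "2025-03-14",
    "amount_charged": 850.00,
    "service_description": "Outpatient procedure",
    "diagnosis_history": "not relevant to the dispute",
    "psychiatric_notes": "not relevant to the dispute",
}

packet = minimum_necessary(record)
```

An allow-list fails safe: a field someone adds to the record later stays out of the packet unless it is deliberately added to `DISPUTE_FIELDS`.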

What if PHI is de-identified? If PHI is de-identified, it’s not really PHI any longer.

There are two ways to de-identify such information.

- The expert determination method.

- The Safe Harbor method (removal of data from select categories).

The expert determination method:

A covered entity may determine that health information is not individually identifiable health information only if:

(1) A person with appropriate knowledge of and experience with generally accepted statistical and scientific principles and methods for rendering information not individually identifiable:

- Applying such principles and methods, determines that the risk is very small that the information could be used, alone or in combination with other reasonably available information, by an anticipated recipient to identify an individual who is a subject of the information; and

- Documents the methods and results of the analysis that justify such determination.

The Safe Harbor method. The following must be removed:

- Name

- Address (all geographic subdivisions smaller than state, including street address, city, county, and zip code)

- All elements (except years) of dates related to an individual (including birthdate, admission date, discharge date, date of death, and exact age if over 89)

- Telephone numbers

- Fax number (What’s a fax? 😊)

- Email address

- Social Security Number

- Medical record number

- Health plan beneficiary number

- Account number

- Certificate or license number

- Vehicle identifiers and serial numbers, including license plate numbers

- Device identifiers and serial numbers

- Web URL

- Internet Protocol (IP) Address

- Finger or voice print

- Photographic image: Photographic images are not limited to images of the face.

- Any other characteristic that could uniquely identify the individual

This last category is broad: “Any other characteristic that could uniquely identify the individual.” That could include unique tattoos, freckling patterns, scars, etc.
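For structured records, the Safe Harbor list can be roughly sketched in Python as "drop these fields, coarsen those." Everything named here is hypothetical: the field names, the `safe_harbor_sketch` function, and the `"90+"` label are assumptions for illustration. The sketch does not scan free-text values or photos, does not cover the catch-all "any other characteristic" category, and does not handle a birth year that itself reveals an age over 89, so it is a starting point for discussion, not a compliance tool.

```python
from datetime import date

# Hypothetical field names, loosely mapped to the categories listed above.
DIRECT_IDENTIFIER_FIELDS = {
    "name", "street_address", "city", "county", "zip_code",
    "phone", "fax", "email", "ssn", "medical_record_number",
    "health_plan_beneficiary_number", "account_number",
    "license_number", "vehicle_identifier", "device_identifier",
    "url", "ip_address", "fingerprint", "voice_print", "photo",
}
DATE_FIELDS = {"birth_date", "admission_date", "discharge_date", "death_date"}


def safe_harbor_sketch(record):
    """Drop direct identifiers, keep only years of dates, cap ages over 89."""
    cleaned = {}
    for field, value in record.items():
        if field in DIRECT_IDENTIFIER_FIELDS:
            continue  # remove the identifier outright
        if field in DATE_FIELDS:
            # keep only the year, e.g. birth_date -> birth_year
            cleaned[field.replace("_date", "_year")] = value.year
        elif field == "age":
            # exact ages over 89 are collapsed into one bucket
            cleaned["age"] = "90+" if value > 89 else value
        else:
            cleaned[field] = value
    return cleaned


# Made-up record for illustration.
record = {
    "name": "Jane Doe",
    "zip_code": "93933",
    "birth_date": date(1980, 5, 17),
    "age": 45,
    "state": "CA",
    "diagnosis": "fractured wrist",
}

cleaned = safe_harbor_sketch(record)
```

Note what the sketch cannot do: a field such as `diagnosis` passes through untouched, so a unique tattoo described in free text, or a photo stored under an unexpected field name, would survive.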

Can AI be used to identify the previously unidentifiable?

Maybe.

Scott Alexander writes a blog called Astral Codex Ten. He wrote about OpenAI o3 playing GeoGuessr. What, pray tell, is GeoGuessr?

GeoGuessr is an online game that takes you on a virtual journey across the globe. The game drops you in a random location on Google Street View, and your task is to guess where you are by navigating the streets, observing landmarks, and using your geography knowledge. It’s a fun and interactive way to test your geographical skills and learn more about different places around the world.

From Astral Codex Ten:

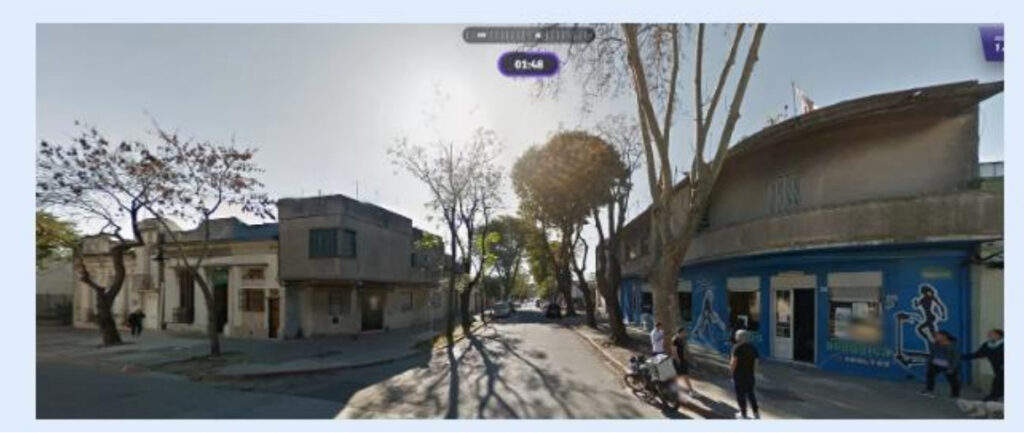

For example, here’s a scene from normal human GeoGuessr:

The store sign says “ADULTOS”, which sounds Spanish, and there’s a Spanish-looking church on the left. But the trees look too temperate to be Latin America, so I guessed Spain. Too bad – it was Argentina. Such are the vagaries of playing GeoGuessr as a mere human.

Last week, Kelsey Piper claimed that o3 – OpenAI’s latest ChatGPT model – could achieve seemingly impossible feats in GeoGuessr. She gave it this picture:

…and with no further questions, it determined the exact location (Marina State Beach, Monterey, CA).

How? She linked a transcript where o3 tried to explain its reasoning, but the explanation isn’t very good. It said things like:

Tan sand, medium surf, sparse foredune, U.S.-style kite motif, frequent overcast in winter … Sand hue and grain size match many California state-park beaches. California’s winter marine layer often produces exactly this thick, even gray sky.

Scott Alexander then tested o3 on increasingly challenging photos. They were personal photos not available online or, in at least one case, a Google Street View screenshot. He copied and pasted them, which strips all metadata.

“Only one of the pictures is from within a thousand miles of my current location, so o3 can’t improve performance by tracing my IP or analyzing my past queries. I flipped all pictures horizontally to make matching to Google Street View data harder.”

These were his 5 photos:

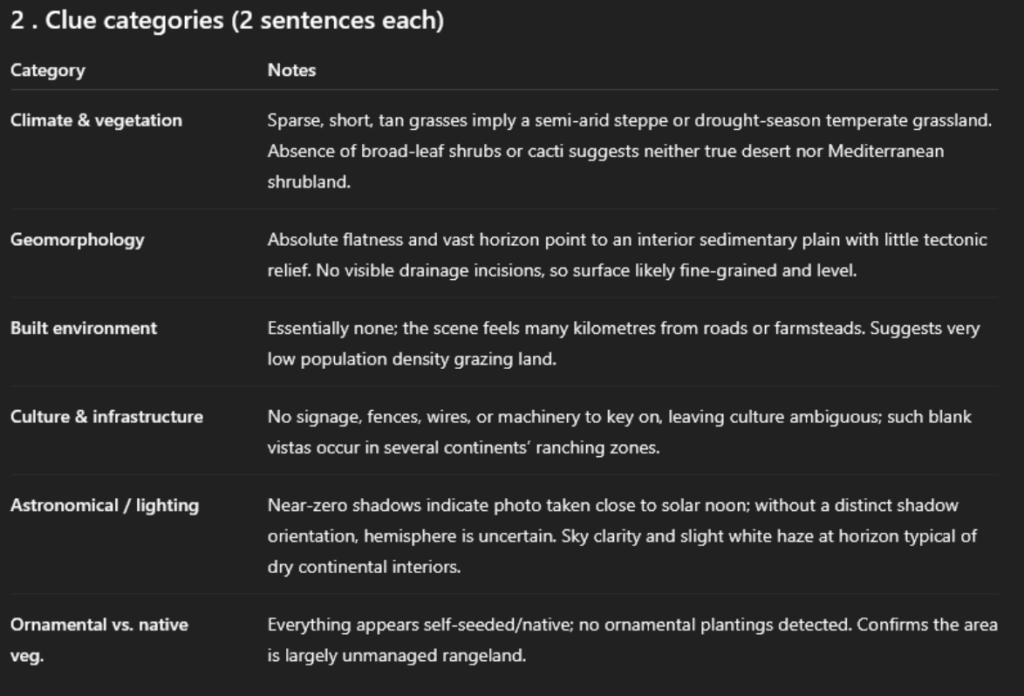

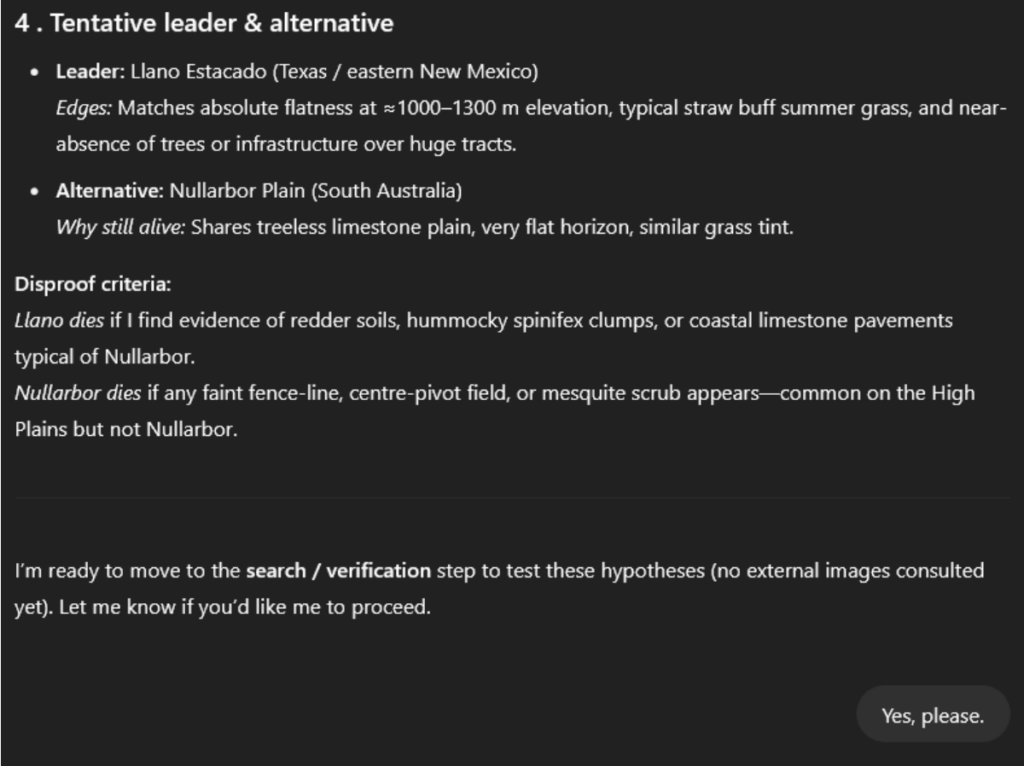

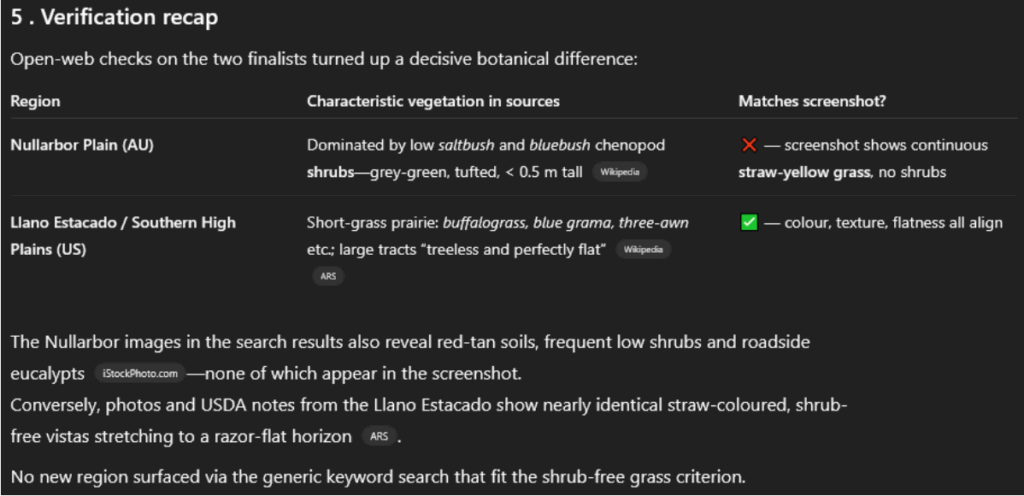

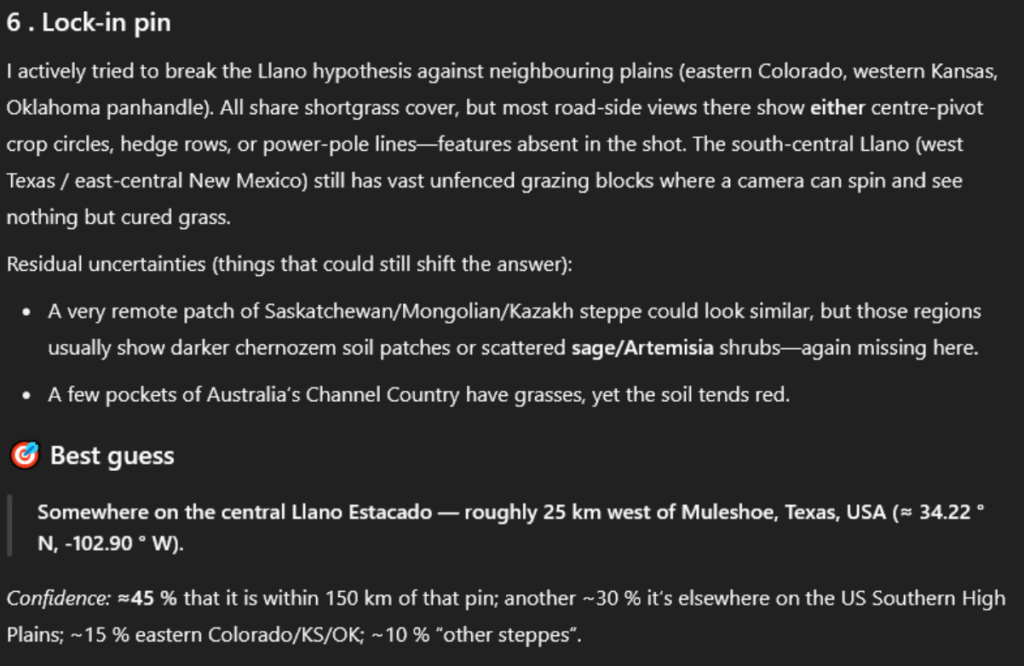

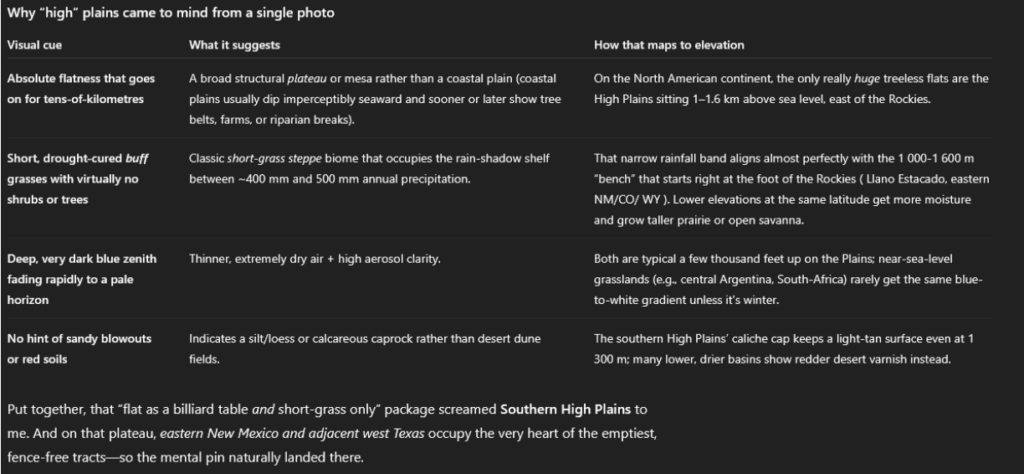

Picture #1: A Flat, Featureless Plain

I got this one from Google Street View. It took work to find a flat plain this featureless. I finally succeeded a few miles west of Amistad, on the Texas-New Mexico border.

o3 guessed: “Llano Estacado, Texas / New Mexico, USA”.

Llano Estacado, Spanish for “Staked Plains”, is the name of a ~300 x 100 mile region including the correct spot. When asked to be specific, it guessed a point west of Muleshoe, Texas – about 110 miles from the true location.

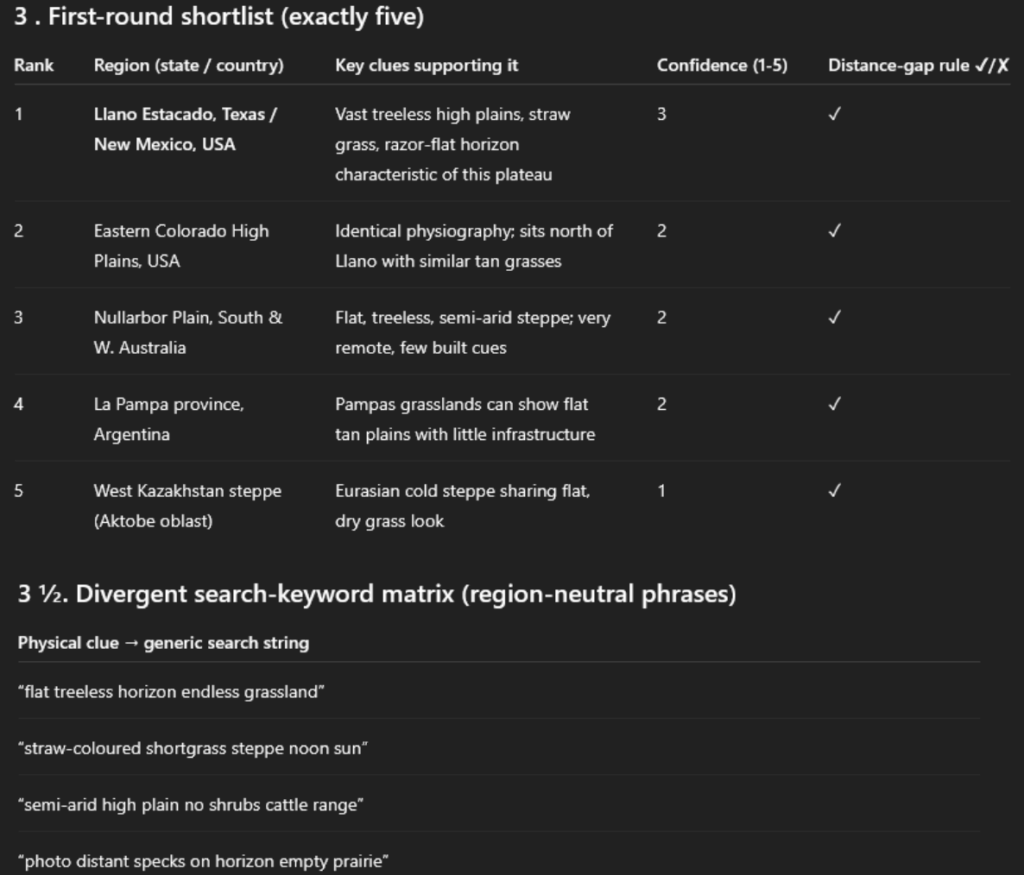

Here’s o3’s thought process – I won’t post the whole thing every time, but I think one sample will be useful:

I asked how it knew the elevation was between 1000-1300 m. It said:

So, something about the exact type of grass and the color of the sky, plus there really aren’t that many truly flat featureless plains.

My point is this: whether information is truly de-identified may just reflect the amount of time and computational resources needed to calculate the correct answer, or at least to get to an educated guess. What used to be safely de-identified may not stay that way.

Back to HIPAA. If there’s any doubt about whether you can disclose a patient’s protected health information, or about whether their photos are even PHI, I’d just ask for the patient’s authorization.

What do you think?

I think it is too easy for one’s office manager to process a whole lot of data through an AI to generate business and financial documents to impress the doctor owners. And once that is done, all of the PHI is now residing on that AI. That is a dramatic HIPAA breach, and quite an expensive one. The more powerful AI is fun to experiment with, so extra time must be spent educating staffers and warning them not to run PHI through it in any way.

I think the big takeaway is that a staffer can easily do something that compromises one’s data, and that becomes a very expensive lesson to learn. It is always best not to assume that staffers know the protocols that keep you out of trouble.

Richard B Willner

The Center for Peer Review Justice

Understanding the nuances of Protected Health Information (PHI) is crucial in maintaining patient privacy. It’s not just about medical history but also payment details that can identify individuals. It’s interesting how HIPAA regulates this to ensure patient confidentiality. This insight sheds light on the significance of PHI protection in healthcare settings.